Blocking link referral spam with nginx

Over at the official website for the At Least You're Trying Podcast, I've been seeing lots of weird Russian domains appear in the analytics reports. Some of the domains are Darodar.com, Blackhatworth.com, and Hulfingtonpost.com.

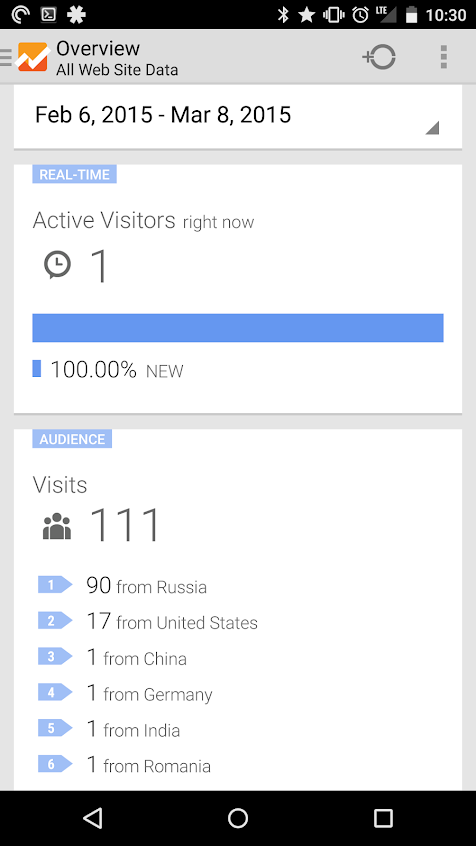

Have a look at the large contingent of Russian visits (yes, I am vain enough to check on my meager internet fame from my phone):

My understanding of this phenomenon is incomplete.

I think it goes like this:

- Spam robot crawls my website

- Spammer links to my website

- {insert marketing-speak involving black-hat SEO here}

- Massive profit for spammer

I've gone around in circles trying to decide how to handle this issue. Visits from the spam crawlers aren't costing much in data transfer at the moment (I'm nowhere near my limit over at Linode), but they are swamping any real traffic in my analytics reports.

I was finally bothered enough to research my options.

My initial thought was to add iptables firewall rules, since that would block the requests before they even reach the web server (nginx). I didn't want to filter on IP address, since these crawlers are likely coming from multiple IPs and I don't want to spend time poring over my server logs to track them down. That left me with blocking by domain, and I found this nice article covering the topic. After looking into some of the syntax used in the post, I started to get the feeling that iptables might not be the right tool for this sort of fuzzy string matching. Also, iptables rule syntax makes me cross-eyed, and even though I only have a handful of domains to block now, I don't want to be writing new firewall rules for every spam domain.

I briefly wondered if I could do something creative with fail2ban, which is already installed on the box. But fail2ban apparently translates its block list into iptables firewall rules, so it would add complexity on top of a tool I was hoping to avoid.

I had hoped to block these requests closer to the metal than the web server, but nginx is quite good at string matching, so I decided it was the better choice. After much internet searching, I finally came across a nice gist showing an nginx configuration file built around the map directive.

I liked the syntax, but there's no setup context and I wasn't sure if this extra configuration file should be referenced in the main nginx.conf file or had to be included in each of the separate server configuration files. I tried to set it up both ways but got errors when restarting nginx. Apparently the map directive can't be used in either of those ways.
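For what it's worth, the nginx documentation lists http as the only valid context for map, which would explain both errors: it is rejected inside a server block and at the top level of nginx.conf outside http { }. Here is a sketch of how a map-based version could be laid out. The server_name is a hypothetical placeholder, and in practice the map would usually live in a file included from inside http { } rather than written inline:

```nginx
# Sketch: map is only valid in the http context; it is rejected in
# server { } and at the top level of nginx.conf.
http {
    # Set $bad_referer to 1 when the Referer matches a spam domain.
    # Entries starting with ~* are case-insensitive regular expressions.
    map $http_referer $bad_referer {
        default                     0;
        ~*semalt\.com               1;
        ~*buttons-for-website\.com  1;
        ~*blackhatworth\.com        1;
        ~*hulfingtonpost\.com       1;
        ~*darodar\.com              1;
        ~*cenoval\.ru               1;
        ~*priceg\.com               1;
    }

    server {
        listen 80;
        server_name example.com;  # hypothetical site

        # "0" counts as false in an if condition, so only flagged requests match.
        if ($bad_referer) {
            return 444;
        }
    }
}
```

nginx only evaluates a map variable when something uses it, so declaring the map in http costs nothing for server blocks that never check $bad_referer.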

The technique used here also looked promising (and it didn't throw errors when restarting nginx).

In the end, I went with the basic pattern as described by the second link, but took a few pointers from the first method. I created a new nginx configuration directory and file, /etc/nginx/global-server-conf/bad_referer.conf, which contains:

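```nginx
# Start each request as "not spam" (avoids uninitialized-variable warnings).
set $bad_referer "";

# Flag any request whose Referer header mentions a known spam domain.
# The pattern must be on one line: line breaks inside the quotes would become
# part of the regex. ~* makes the match case-insensitive; \. matches a literal dot.
if ($http_referer ~* "(semalt\.com|buttons-for-website\.com|blackhatworth\.com|hulfingtonpost\.com|darodar\.com|cenoval\.ru|priceg\.com)") {
    set $bad_referer "1";
}

# Drop flagged requests without sending any response.
if ($bad_referer) {
    return 444;
}
```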

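I feel like the 444 status code was the right choice for the return value: it's a non-standard code that tells nginx to close the connection without sending any response at all, so the crawler gets nothing for its trouble.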

Then, within each site's server configuration file, I added the line:

```nginx
include /etc/nginx/global-server-conf/*;
```
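The include has to sit inside the server block, because bad_referer.conf uses if, set, and return, which nginx accepts in server and location contexts but not directly under http. A minimal sketch of a site file with the include in place; the server_name, root, and location block are hypothetical placeholders:

```nginx
server {
    listen 80;
    server_name example.com;     # hypothetical domain
    root /var/www/example.com;   # hypothetical document root

    # Pull in every shared snippet, including bad_referer.conf, so its
    # referer check runs before any location block is chosen.
    include /etc/nginx/global-server-conf/*;

    location / {
        try_files $uri $uri/ =404;
    }
}
```

Running `nginx -t` after editing checks the configuration for syntax errors before reloading, which is a gentler way to find mistakes than a failed restart.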

In my bad_referer.conf file, I listed all of the sketchy domains, except one. I skipped bestwebsitesawards.com for now since it wasn't generating much traffic, and I wanted to have something to use as a "control group".

In a month or so, I'll update this post with another screenshot to prove that I've kept the spam robots at bay, or to show that I've failed miserably.

2015-03-11 update:

I still have some more work to do.

Because the Russian numbers are still large, I've added o-o-6-o-o.com and humanorightswatch.org to my block list.
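A sketch of /etc/nginx/global-server-conf/bad_referer.conf with the two new domains appended to the pattern. It keeps the flag-then-return structure; the hyphens in o-o-6-o-o.com are literal outside a character class, and the dots are escaped so they only match a real period:

```nginx
# Start each request as "not spam".
set $bad_referer "";

# One-line, case-insensitive pattern covering the original domains plus the
# two added in this update.
if ($http_referer ~* "(semalt\.com|buttons-for-website\.com|blackhatworth\.com|hulfingtonpost\.com|darodar\.com|cenoval\.ru|priceg\.com|o-o-6-o-o\.com|humanorightswatch\.org)") {
    set $bad_referer "1";
}

# Close the connection on flagged requests without sending a response.
if ($bad_referer) {
    return 444;
}
```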

Maybe this traffic will never quite go away...